Andreas Rössler

ID-Reveal: Identity-aware DeepFake Video Detection

Dec 04, 2020

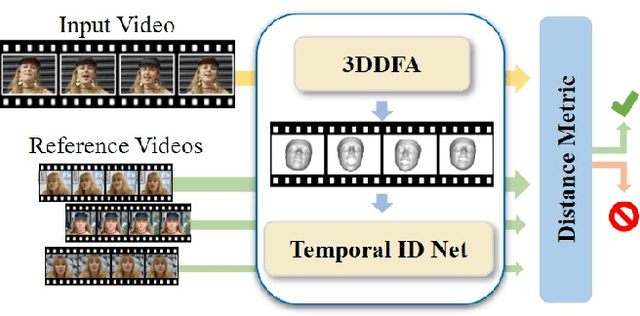

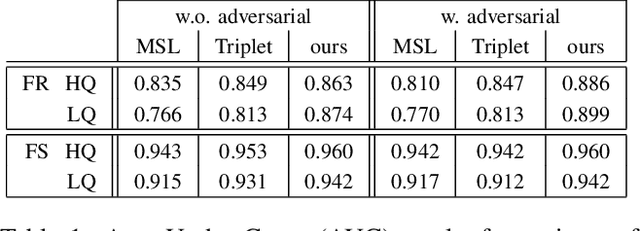

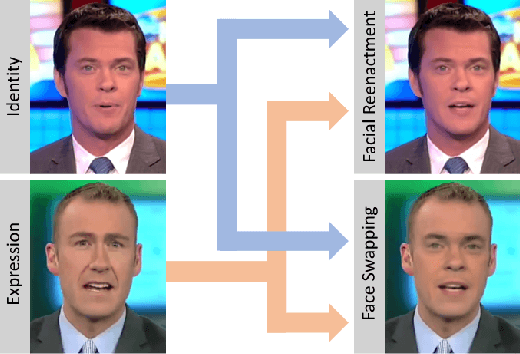

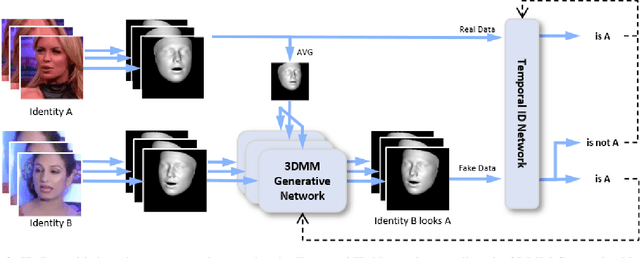

Abstract: State-of-the-art DeepFake forgery detectors are trained in a supervised fashion to answer the question 'Is this video real or fake?'. Given that their training is typically method-specific, these approaches generalize poorly across different types of facial manipulations, e.g., face swapping or facial reenactment. In this work, we look at the problem from a different perspective by focusing on the facial characteristics of a specific identity; i.e., we want to answer the question 'Is this the person they are claimed to be?'. To this end, we introduce ID-Reveal, a new approach that learns temporal facial features, specific to how each person moves while talking, by means of metric learning coupled with an adversarial training strategy. Our method is independent of the specific type of manipulation since it is trained only on real videos. Moreover, because it relies on high-level semantic features, it is robust to widespread and disruptive forms of post-processing. We performed a thorough experimental analysis on several publicly available benchmarks, such as FaceForensics++, Google's DFD, and Celeb-DF. Compared to the state of the art, our method improves generalization and is more robust to low-quality videos, which are commonly spread over social networks. In particular, we obtain an average improvement of more than 15% in terms of accuracy for facial reenactment on highly compressed videos.
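The identity check described in this abstract can be illustrated with a minimal sketch. It assumes each video has already been mapped to a fixed-length embedding by some learned model, and it accepts the claimed identity when the test embedding lies close enough to at least one reference embedding of that person. The function names, the squared Euclidean distance, and the threshold are illustrative assumptions; the abstract does not specify the actual decision rule.

```python
from typing import Sequence


def min_squared_distance(test: Sequence[float],
                         references: Sequence[Sequence[float]]) -> float:
    """Smallest squared Euclidean distance from `test` to any reference embedding."""
    if not references:
        raise ValueError("at least one reference embedding is required")
    return min(
        sum((t - r) ** 2 for t, r in zip(test, ref))
        for ref in references
    )


def is_claimed_identity(test: Sequence[float],
                        references: Sequence[Sequence[float]],
                        threshold: float) -> bool:
    """Hypothetical decision rule: accept if the test video is near a reference video."""
    return min_squared_distance(test, references) <= threshold
```

In a metric-learning setup of this kind, the threshold would typically be tuned on held-out real videos, which matches the abstract's point that no manipulated data is needed for training.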

SpoC: Spoofing Camera Fingerprints

Nov 27, 2019

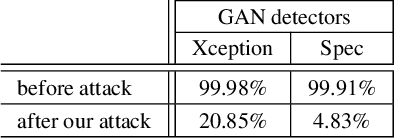

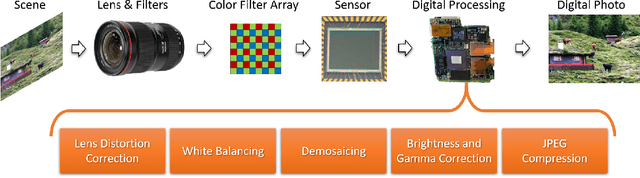

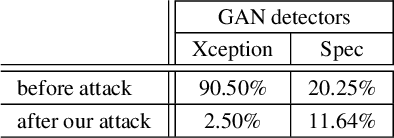

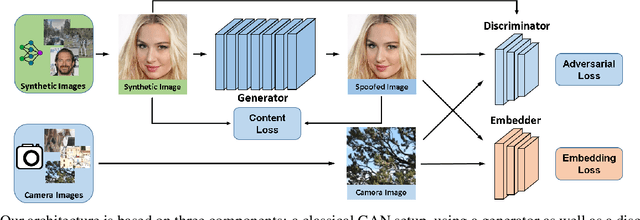

Abstract: Thanks to the fast progress in synthetic media generation, creating realistic false images has become very easy. Such images can be used to wrap rich fake news with enhanced credibility, spawning a new wave of high-impact, high-risk misinformation campaigns. Therefore, there is a fast-growing interest in reliable detectors of manipulated media. The most powerful detectors, to date, rely on the subtle traces left by any device on all images acquired by it. In particular, due to proprietary in-camera processes, like demosaicing or compression, each camera model leaves trademark traces that can be exploited for forensic analyses. The absence or distortion of such traces in the target image is a strong hint of manipulation. In this paper, we challenge such detectors to gain better insight into their vulnerabilities. Such a study is important for building better forgery detectors that can withstand malicious attacks. Our proposal consists of a GAN-based approach that injects camera traces into synthetic images. Given a GAN-generated image, we insert the traces of a specific camera model into it and deceive state-of-the-art detectors into believing the image was acquired by that model. Likewise, we deceive independent detectors of synthetic GAN images into believing the image is real. Experiments prove the effectiveness of the proposed method in a wide array of conditions. Moreover, no prior information on the attacked detectors is needed, only sample images from the target camera.

FaceForensics++: Learning to Detect Manipulated Facial Images

Jan 25, 2019

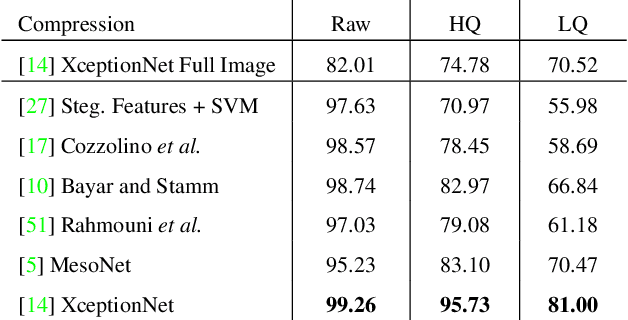

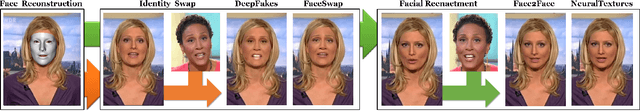

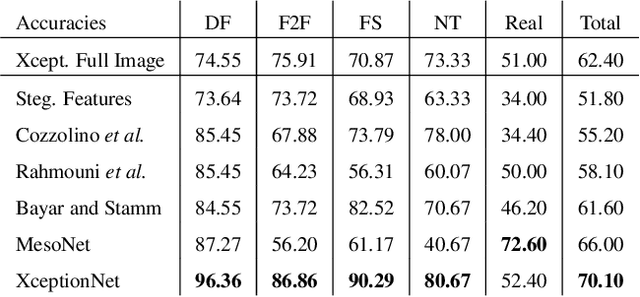

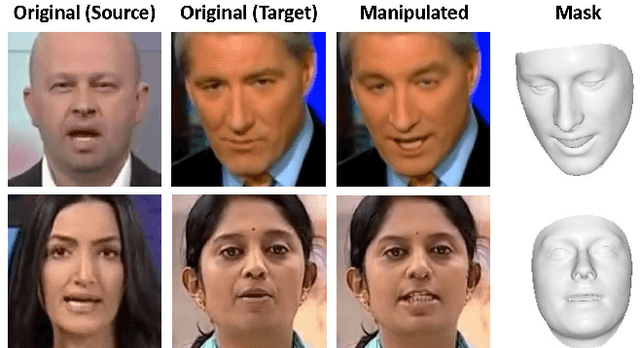

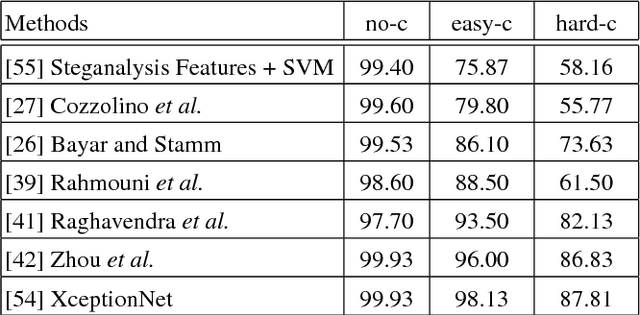

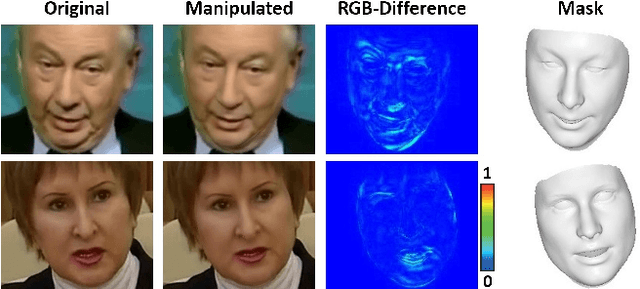

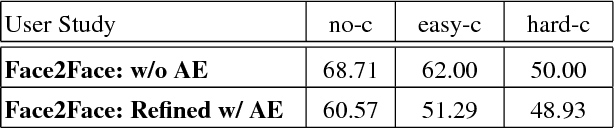

Abstract: The rapid progress in synthetic image generation and manipulation has now come to a point where it raises significant concerns about the implications for society. At best, this leads to a loss of trust in digital content, but it might even cause further harm by spreading false information and the creation of fake news. In this paper, we examine the realism of state-of-the-art image manipulations, and how difficult it is to detect them, either automatically or by humans. In particular, we focus on DeepFakes, Face2Face, and FaceSwap as prominent representatives for facial manipulations. For each approach, we create more than half a million manipulated images. The resulting publicly available dataset is at least an order of magnitude larger than comparable alternatives and it enables us to train data-driven forgery detectors in a supervised fashion. We show that the use of additional domain-specific knowledge improves forgery detection to an unprecedented accuracy, even in the presence of strong compression. By conducting a series of thorough experiments, we quantify the differences between classical approaches, novel deep learning approaches, and the performance of human observers.

ForensicTransfer: Weakly-supervised Domain Adaptation for Forgery Detection

Dec 06, 2018

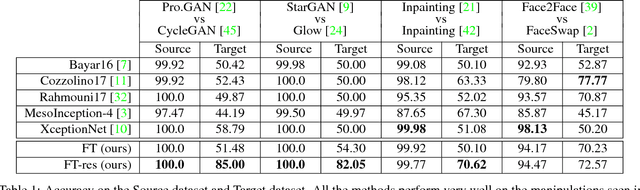

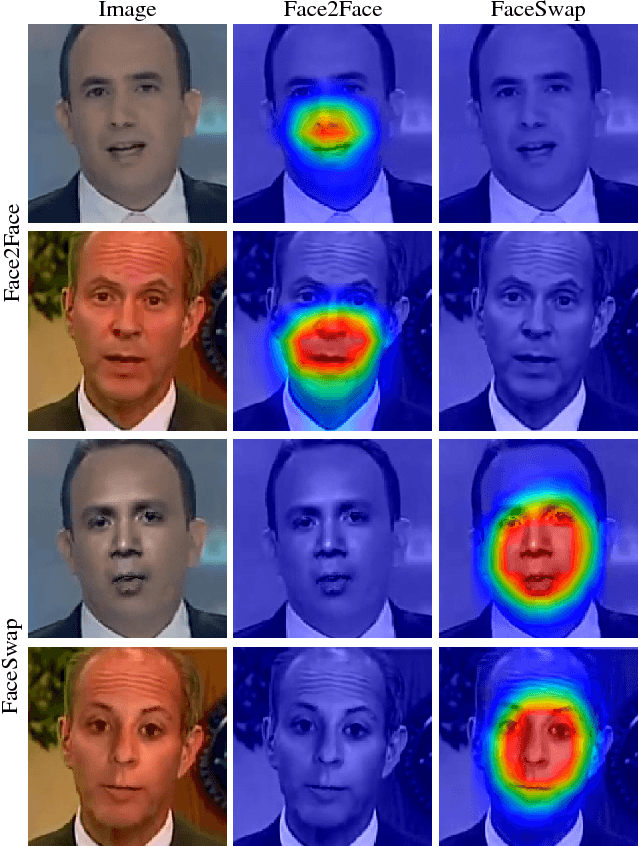

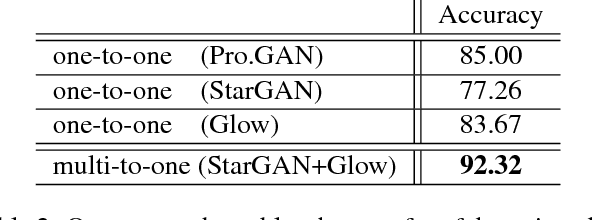

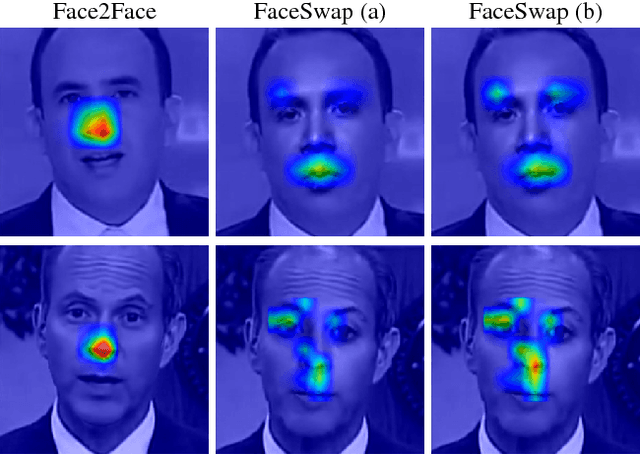

Abstract: Distinguishing fakes from real images is becoming increasingly difficult as new sophisticated image manipulation approaches come out by the day. Convolutional neural networks (CNNs) show excellent performance in detecting image manipulations when they are trained on a specific forgery method. However, on examples from unseen manipulation approaches, their performance drops significantly. To address this limitation in transferability, we introduce ForensicTransfer. ForensicTransfer tackles two challenges in multimedia forensics. First, we devise a learning-based forensic detector which adapts well to new domains, i.e., novel manipulation methods. Second, we handle scenarios where only a handful of fake examples are available during training. To this end, we learn a forensic embedding that can be used to distinguish between real and fake imagery. We use a new autoencoder-based architecture which enforces activations in different parts of a latent vector for the real and fake classes. Together with the constraint of correct reconstruction, this ensures that the latent space keeps all the relevant information about the nature of the image. Therefore, the learned embedding acts as a form of anomaly detector; namely, an image manipulated by an unseen method will be detected as fake provided it maps sufficiently far away from the cluster of real images. Compared with prior work, ForensicTransfer shows significant improvements in transferability, which we demonstrate in a series of experiments on cutting-edge benchmarks. For instance, on unseen examples, we achieve up to 80-85% in terms of accuracy compared to 50-59%, and with only a handful of seen examples, our performance already reaches around 95%.
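The latent-space idea in this abstract can be illustrated with a minimal sketch. It assumes a latent vector whose first half is meant to activate for real images and whose second half for fake ones, and it classifies by comparing the mean absolute activation of the two halves. The even split, the function names, and the activation measure are illustrative assumptions, not details taken from the paper.

```python
from typing import Sequence, Tuple


def partition_energy(latent: Sequence[float]) -> Tuple[float, float]:
    """Mean absolute activation of the assumed 'real' and 'fake' halves."""
    if len(latent) < 2:
        raise ValueError("latent vector needs at least two entries")
    half = len(latent) // 2
    real_part, fake_part = latent[:half], latent[half:]
    real_energy = sum(abs(v) for v in real_part) / len(real_part)
    fake_energy = sum(abs(v) for v in fake_part) / len(fake_part)
    return real_energy, fake_energy


def classify(latent: Sequence[float]) -> str:
    """Hypothetical rule: the more strongly activated half decides the class."""
    real_energy, fake_energy = partition_energy(latent)
    return "fake" if fake_energy > real_energy else "real"
```

Under this kind of rule, an image from an unseen manipulation method is flagged as fake whenever its latent code fails to concentrate activation in the part reserved for real images, which is one way to read the anomaly-detector behaviour the abstract describes.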

FaceForensics: A Large-scale Video Dataset for Forgery Detection in Human Faces

Mar 24, 2018

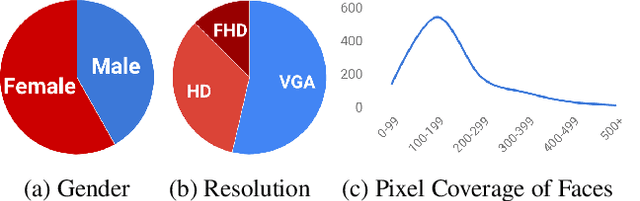

Abstract: With recent advances in computer vision and graphics, it is now possible to generate videos with extremely realistic synthetic faces, even in real time. Countless applications are possible, some of which raise a legitimate alarm, calling for reliable detectors of fake videos. In fact, distinguishing between original and manipulated video can be a challenge for humans and computers alike, especially when the videos are compressed or have low resolution, as often happens on social networks. Research on the detection of face manipulations has been seriously hampered by the lack of adequate datasets. To address this, we introduce a novel face manipulation dataset of about half a million edited images (from over 1000 videos). The manipulations have been generated with a state-of-the-art face editing approach. The dataset exceeds all existing video manipulation datasets by at least an order of magnitude. Using our new dataset, we introduce benchmarks for classical image forensic tasks, including classification and segmentation, considering videos compressed at various quality levels. In addition, we introduce a benchmark evaluation for creating indistinguishable forgeries with known ground truth, for instance with generative refinement models.
